By Bijan Bowen

Comparing the vision capabilities of GPT4o and Gemini 1.5 Pro

With the recent competition between Gemini and ChatGPT gathering a lot of attention in AI circles, I decided that I was doing myself a disservice by not testing Gemini and giving it a fair shot. While I mainly use OpenAI products for my day-to-day non-local LLM needs, I have grown wary of becoming overly dependent on any one specific offering. As I recently tested the GPT4o Vision capabilities, I figured the best way to get my feet wet in the Gemini world would be to emulate the same test in a sort of head-to-head comparison between the two: Gemini 1.5-Pro-Preview-0514 vs. GPT4o.

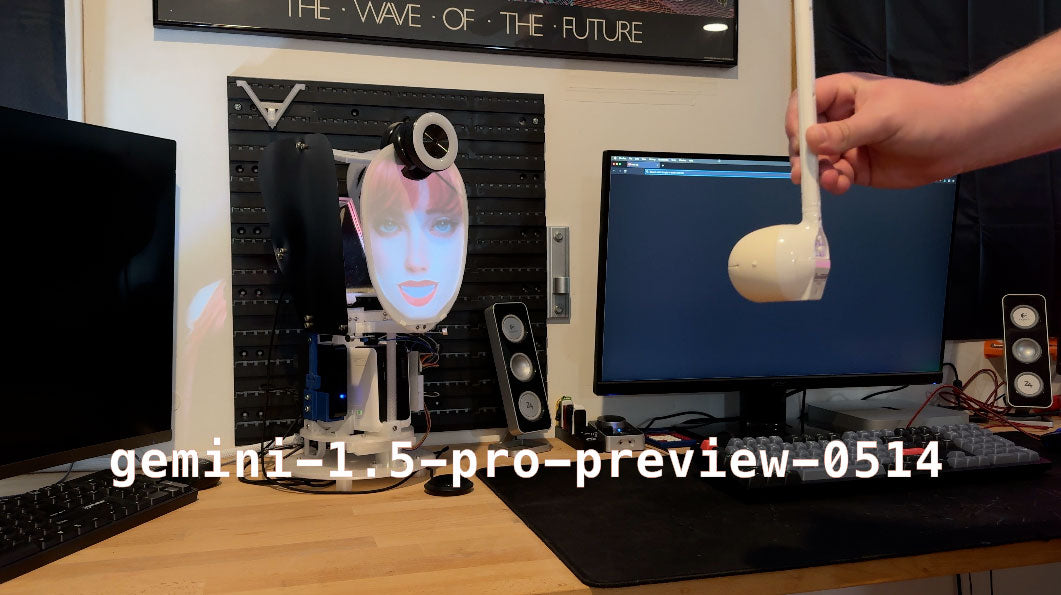

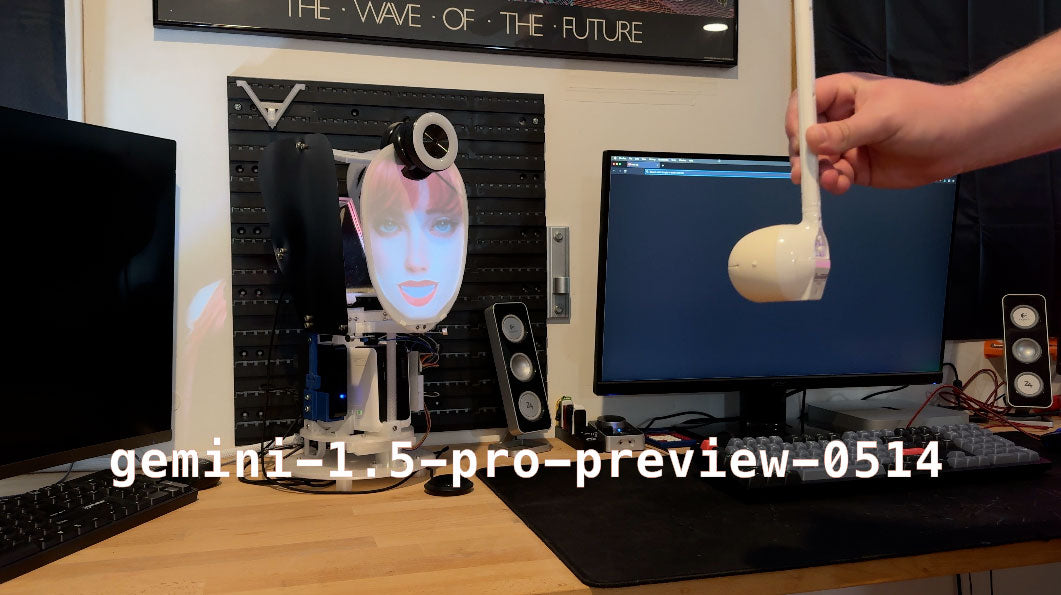

For this test, I am once again using the Ominous Industries R1 robot that I currently sell for $199, with a Razer Kiyo webcam mounted on it.

To begin, I had to decide how I would try to fairly assess the performance of both models. While I am not a scientist, I wanted to ensure that the conditions each model assessed were as similar as possible, so as not to give an unfair advantage to either. I gathered a handful of items which I presumed would provide varying levels of difficulty and began my testing.
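One way to keep conditions comparable is to build both requests from the same captured frame and the same prompt. The snippet below is a minimal sketch of that idea, not the code used in this test: the prompt text and the `build_payloads` helper are hypothetical, and the two request shapes follow the public OpenAI chat completions and Gemini `generateContent` REST formats as I understand them, so check the current documentation before relying on them. It only builds the request bodies and sends nothing over the network.

```python
import base64

# Hypothetical prompt for illustration; not the prompt used in the actual test.
PROMPT = "Describe what you see in this image."


def build_payloads(jpeg_bytes: bytes, prompt: str = PROMPT) -> dict:
    """Build request bodies for both models from one frame and one prompt."""
    # Encode the frame once so both models receive byte-identical image data.
    b64 = base64.b64encode(jpeg_bytes).decode("ascii")

    # Assumed shape of an OpenAI chat completions request with an inline image.
    gpt4o_payload = {
        "model": "gpt-4o",
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url",
                 "image_url": {"url": f"data:image/jpeg;base64,{b64}"}},
            ],
        }],
    }

    # Assumed shape of a Gemini generateContent request with an inline image.
    gemini_payload = {
        "contents": [{
            "role": "user",
            "parts": [
                {"text": prompt},
                {"inline_data": {"mime_type": "image/jpeg", "data": b64}},
            ],
        }],
    }

    return {"gpt4o": gpt4o_payload, "gemini": gemini_payload}
```

Encoding the frame once and reusing it means any difference in the answers comes from the models rather than from slightly different captures, which matters given how much the way an item is held can change between shots.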

I began by showing the models the laser module from an Xtool D1 10w laser cutting machine. As I presumed, neither of the models identified what the item was, but in this case, GPT4o seemed to do a slightly better job of identifying the specific item I was holding as it noted the heat sink and the wires coming out of the back.

Before we go further, I want to note that both of the models were also describing the surroundings of the image and were not specifically focused on only identifying the item I was holding in front of them. To get a full understanding of what the models were seeing, it is probably best to watch the video of the demonstration, as writing every difference in all the tests would likely make this article far too long. I just wanted to mention this as my testing of specific items only captures a small portion of the output the models were generating.

Next, I had an interesting challenge for them: the Otamatone, a Japanese electronic musical instrument. While both models correctly identified the Otamatone by name, GPT4o said a bit more about it, specifically referring to it as a Japanese instrument. I won't say that Gemini did "worse" here, since the hard part was identifying the Otamatone, which both models did. The difference seems to come down to how much detail about the item each model chose to provide.

Next up, a dealer promo model of a 1994 Dodge Viper Roadster. In this example, Gemini clearly specified the make and model of the car, even correctly referring to it as a convertible (though Dodge did refer to it as a "Roadster"). GPT4o mentioned that I was holding a yellow toy car convertible, but it neglected to mention the make and model of the vehicle like Gemini did. I was a bit surprised at this, and in watching the video back I see I was holding the car a bit differently between the two examples, though I do not think the difference was wide enough to explain the miss from GPT4o.

Following this, a 2006 copy of "Runescape - The Official Handbook." Both models did well here, identifying the book name and describing the main elements on the cover, such as the skeleton and "knight" sitting together. GPT4o also picked up the subtext on the cover advertising an exclusive poster inside, which surprised and impressed me. Both models did well on this, though GPT4o seems to have picked up a bit more context from the book.

Following this, I tried to change things up a bit by holding two similar, yet different, items for the image: a silver PlayStation 2 controller in the left hand and a black original Xbox controller in the right. Both models correctly identified the game controllers, although due to the way the images are taken, they mixed up which hand each controller was in. GPT4o not only specified which side of the image it was referring to when mentioning a specific controller, it also explained how the controller was identifiable, which was a welcome surprise.

Finally, I used two pairs of glasses: a pair of generic black safety glasses and a pair of laser goggles from the Xtool D1. Neither model correctly identified the laser goggles as such, though I was not expecting them to based on the relatively weak camera and non-optimal lighting setups. The response for the black safety glasses was similar enough, but the answers for the laser goggles were vastly different. Gemini called them black sunglasses and noted their larger size and wrap-around style. GPT4o referred to these goggles as some sort of VR or AR glasses, based on their bulk and "futuristic design."

To conclude, both models did very well, and it was a very fun and enlightening test. Surprisingly, GPT4o kept mentioning that there was a dog on or around the couch in the background of the image, which was not the case. It was reminiscent of an LLM hallucination mixed with a human sense of pareidolia. Gemini did not do this, but for some reason, it kept mentioning how "cluttered" my space was. I didn't think it was that bad, but I must now reassess my stance on what counts as clean when it comes to one's workspace.

Here is the full video comparison on YouTube.

#AI #MachineLearning #AIComparison #VisionAI #GPT4 #GeminiPro #TechReview #AIResearch #ArtificialIntelligence #TechTest #AITechnology #DeepLearning #TechTrends